This is part one in a series on getting beyond the basics with Azure ML.

In the prior series, Low-Code Machine Learning with Azure ML, we saw how to get started with Azure Machine Learning in a fairly pain-free way, especially for developers getting started with machine learning. In this series, I will assume that you already know all of those details and instead, we’re going to go full-code.

There are a few different ways in which we can go full-code with Azure ML. Today, we’re going to look at the easiest of those methods: using Jupyter notebooks within Azure ML Studio.

Jupyter Notebooks

Azure ML has built-in support for Jupyter notebooks. These notebooks execute on compute instances, meaning that you’re going to want to have one created and enabled.

Within notebooks, we can use the Azure ML Python SDK. The Python SDK includes classes for the different constructs in Azure ML, like datastores, datasets, environments, and runs, giving you an easily-accessible method for working with these constructs. Azure ML used to have an R SDK but they’ve deprecated it and the day I heard that, I needed to fly my flag at half mast.

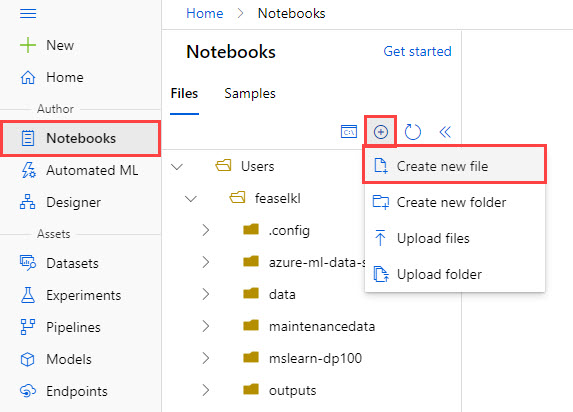

To create a new notebook, first navigate to the Notebooks item in the Author menu. Then, select the add option (+) and choose Create new file. By default, the file type will be a Notebook in .ipynb format.

Be sure that when you create the file, you leave the .ipynb in the File name section—this page will not automatically add the extension if you leave it off.

In case you are not familiar with Jupyter notebooks, the name Jupyter is a portmanteau of the three data science languages Julia, Python, and R. The idea is that Jupyter notebooks, running on Python, have support for a variety of languages by way of kernels. There are dozens of kernels available for your local installations of Jupyter but Azure ML only supports Python and R.

Notebooks provide us an easy-to-use interface for working with code. We can create blocks of code and execute them as though we were typing the code into the console. We can also incorporate text and instructions in markdown format, making these a nice way of combining instructions, code, and tips in one document.

Working with the Python SDK

Getting to any of the Python SDK elements we care about, such as workspaces, environments, datasets, and more is pretty straightforward. In this scenario, we’re going to read from a SQL Server database as our datastore, write a query to access data, and perform a simple bit of decision tree regression in Python. First, let’s load up all of the classes and names we’ll need.

from azureml.core import Workspace, Environment, Datastore, Dataset

from azureml.core.experiment import Experiment

from azureml.data.datapath import DataPath

from azureml.data import DataType

from azureml.core.run import Run

from azureml.core.model import Model

from sklearn.tree import DecisionTreeRegressor

from sklearn.metrics import mean_squared_error

import joblib

import numpy as np

import pandas as pd

from math import sqrt

ws = Workspace.from_config()

Most of this is available in the azureml.core namespace, though some items are in sub-namespaces and a couple of things are in a separate azureml.data namespace.

Aside from Azure ML, we include a couple of things from scikit-learn, specifically a decision tree-based regression algorithm and a metric which helps us report on how far off our estimated results are from actuals. We’ll use the joblib library’s dump function later on to save the results of our model to Azure Blob Storage. The rest of the packages—numpy, pandas, and math—are for numeric processing, data science, and mathematical operations, respectively.

The last thing we do in this block is load our workspace from a configuration file. When you create a compute instance, your instance details are automatically made available to the Python SDK, so you don’t need to store your subscription ID or workspace name. Those details are in a JSON file on disk and don’t get checked into source control.

Now that we have a workspace, let’s use it to get data from Azure SQL Database.

expenses_datastore = Datastore.get(ws, datastore_name="expense_reports")

query = """SELECT

er.EmployeeID,

CONCAT(e.FirstName, ' ', e.LastName) AS EmployeeName,

ec.ExpenseCategoryID,

ec.ExpenseCategory,

er.ExpenseDate,

YEAR(er.ExpenseDate) AS ExpenseYear,

-- Python requires FLOAT values--it does not support DECIMAL

CAST(er.Amount AS FLOAT) AS Amount

FROM dbo.ExpenseReport er

INNER JOIN dbo.ExpenseCategory ec

ON er.ExpenseCategoryID = ec.ExpenseCategoryID

INNER JOIN dbo.Employee e

ON e.EmployeeID = er.EmployeeID

WHERE

YEAR(er.ExpenseDate) < 2017;"""

queryTraining = DataPath(expenses_datastore, query)

data_types = {

'EmployeeID': DataType.to_long(),

'EmployeeName': DataType.to_string(),

'ExpenseCategoryID': DataType.to_long(),

'ExpenseCategory': DataType.to_string(),

'ExpenseDate': DataType.to_datetime('%Y-%m-%d'),

'ExpenseYear': DataType.to_long(),

'Amount': DataType.to_float()

}

queryTesting = DataPath(expenses_datastore, query.replace("YEAR(er.ExpenseDate) < 2017;", "YEAR(er.ExpenseDate) >= 2017;"))

training = Dataset.Tabular.from_sql_query(queryTraining, set_column_types=data_types).to_pandas_dataframe()

testing = Dataset.Tabular.from_sql_query(queryTesting, set_column_types=data_types).to_pandas_dataframe()

In the first line, we load a datastore that I created called expense_reports. This datastore points to Azure SQL Database.

From there, I have a fairly straightforward SQL query which accesses data in multiple tables in my database. Note that ExpenseReport.Amount is of data type DECIMAL in my table, but Python doesn’t like that datatype, so I need to cast it to a FLOAT first. Otherwise, I’ll get an error when trying to work with the data.

I then prepare the query as queryTraining. I will need to specify the data types to make it clear what each type is and to ensure that there are no translation difficulties when pulling the information from Azure SQL DB into a Pandas DataFrame. After setting up my training query, I have a testing query. The only difference between the two is that I’m using pre-2017 data for training and post-2017 data for testing, so I use the string replace() method in Python to make that swap.

The final two lines actually execute the query for my training and test data sets, respectively. I send in the prepared query and the column types, and I also tell Python that I’d like the results brought into a Pandas DataFrame, as DataFrames are the de facto method for working with most data science and machine learning libraries in the Python world.

I have data, so let’s now create an experiment and do something with that data.

# Begin experiment

experiment = Experiment(workspace=ws, name="ExpenseReportsNotebook")

run = experiment.start_logging()

# Fit the data to a decision tree

reg = DecisionTreeRegressor()

reg.fit(training[["ExpenseCategoryID", "ExpenseYear"]], training[["Amount"]].values.ravel())

# Generate predictions based on the trained model

pred = pd.DataFrame({"AmountPrediction": reg.predict(testing[["ExpenseCategoryID", "ExpenseYear"]]) })

# Concatenate testing data with predictions

testdf = pd.concat([testing, pred], axis=1)

# Calculate the root mean squared error

rmse = sqrt(mean_squared_error(testdf["Amount"], testdf["AmountPrediction"]))

Experiments work just like in the designer: they are a helpful way of grouping together executions of a process. Each execution is called a run and we get our run by calling the start_logging() method on our experiment. Once those are done, I fit the data to a decision tree, feeding it the expense category ID (essentially, how expensive a city is) and the year (dealing with things like inflation) and I want to use those two values to predict the amount of an expense report. I then generate my predictions based on the testing dataset, add the new AmountPrediction column to my test DataFrame, and calculate the error between the actual amount of the expense report versus my predicted amount.

Note that none of the code in this block after the first two lines is Azure ML-specific. This is all “normal” Python for data scientists, so we don’t need to use Microsoft algorithms or Microsoft-specific processes to handle the work. We now have trained a model, performed testing, and figured out how far our model is from reality in terms of root mean squared error. Let’s get back to some Azure ML-specific operations.

# Log the overall rmse

run.log('RMSE', rmse)

print()

print('#############################')

print('RMSE is {}'.format(rmse))

print('#############################')

print()

# Log each employee's name, expense category, and RMSE

employees = testdf.groupby(['EmployeeName', 'ExpenseCategory'])

for cat, grp in employees:

empname, expcat = cat

rmse = sqrt(mean_squared_error(grp["Amount"], grp["AmountPrediction"]))

rescat = ('{}, {}, RMSE'.format(empname, expcat))

run.log(rescat, rmse)

# Save the model and upload it to the run

model_file_name = 'outputs/model.pkl'

joblib.dump(value = reg, filename = model_file_name)

# Typically, the run.upload_file() method would be used to capture saved files

# However, as per the Azure documentation, files stored in the outputs/ directory are automatically captured by the current Run

# Complete the run

run.complete()

# Register the model with the workspace

model = run.register_model(model_name = 'ExpenseReportsNotebookModel', model_path = model_file_name)

The log() function allows us to log measures. We can print the measures to the notebook itself, but notebook results are transitory in the sense that we can erase and re-run the notebook, possibly getting different results. The logged details in a run will stick around for a while.

After printing the overall RMSE, we want to get the root mean squared error per combination of employee name and expense category. The idea behind this dataset is that there were some differences in behavior due to some people trying to cheat the system starting in 2017. We want to track each person in each category to see who was responsible and how much we lost as a result of their cheating.

After we write those results to the log, we then use the dump() function on joblib to write out our regression model, serializing it in pickle format. Once we’re done here, we complete the run and register the model we’ve created.

The only output we get from the notebook looks like this:

#############################

RMSE is 14.054870519339469

#############################

That tells us that our model for predicting expense reports is off by approximately $14.05 per expense report, which is pretty bad. But the reason it’s off is due to fraud and we can see it pretty clearly by navigating to the Experiments tab and selecting our ExpenseReportsNotebook experiment.

Inside there, we have at least one run. Select the latest run and you’ll see some details about it.

The Metrics box shows us some details right of the bat, especially if you know what you’re looking for. Specifically, Jack Aubrey, William Mowett, and Emily Sweeting all have root mean square errors in expensive cities of somewhere around $5 per report, whereas William Babbington has one over $15. If we navigate to the Metrics page, we can focus in on just the inexpensive cities and see it clearly.

We can see that 5 of the 12 people were involved in a bid to submit artificially high expense reports, causing our model to be off by $15 per report rather than the natural $5 we get with the others.

Conclusion

In today’s post, we got a feeling for how we can use Azure ML notebooks to perform machine learning work. In the next post, we’re going to make it even more personal, performing data science inside your own home!

One thought on “Beyond the Basics with Azure ML: Working with Notebooks”