I’m going to tell this one in story format, so here’s the short of it up front:

tl;dr — Clustered columnstore indexes don’t like the combination of wipe-and-replace with multi-threaded trickle loaders. Avoid that pattern.

The Setup

In the olden days, we had a large fact table with a standard clustered index and some standard non-clustered indexes. Because the primary use of this fact table was to aggregate fairly large amounts of data, performance was less than stellar.

Then came SQL Server 2014, with its promises of updatable, clustered columnstore indexes. I jumped on this immediately and replaced my large fact table with one built off of a clustered columnstore index. Initial testing looked great, giving me a 2-5X performance gain depending upon the query, and that was good enough for our PM to authorize moving forward with the project.

After that project went live, all was well…for about a week. Then things started slowing down. It took a while before we were able to tell that there was a problem and, from there, find the root cause.

The Root Cause

To understand the problem, let’s talk a little about our infrastructure. We load the warehouse on a per-client, per-day basis and we have a number of processes which load data concurrently. In other words, one process loads data for Client A on June 1st while another process may load data for Client B on June 1st and a third process loads data for Client C on June 2nd, all at the same time. Loading data includes two steps: deleting current data and loading new data. The first step of deleting current data happens because it turned out to be much more efficient in our scenario to delete old records and then insert new records rather than trying to merge data in (either using the MERGE keyword or combination INSERT/UPDATE/DELETE statements).

There are two major issues that we experienced with this pattern against a clustered columnstore index in SQL Server 2014. First, there was no way to reorganize or rebuild the index online in SQL Server 2014, meaning that the only way I could clean up deleted records would be to rebuild an entire partition. Given that our hot load partitions are also hot access (specifically, the current and prior months) and we’re a 24/7 company, rebuilding those partitions is pretty much out of the question. This means that I wouldn’t be able to clean out partitions which are full of deleted records. That means that my compressed columnstore rowgroups were woefully under-populated.

At the same time, we experienced large numbers of open rowgroups in the deltastore, many of which contained just a few records. My best understanding of why this happened is as follows: when a process goes to delete records for a customer-day combination, that process can lock multiple deltastore rowgroups. If other processes are trying to insert data into the deltastore while that first process tries to delete records, they’ll open new rowgroups because the current ones are locked. After a while, we’d end up with hundreds or thousands of open rowgroups in the deltastore, many of which contained well under 10,000 rows apiece but which added up to tens of millions of records in total. Given the way the deltastore works (it’s a big heap), having to scan a giant heap made our queries slower. The worst part is that because these rowgroups tended not to grow much in size, the tuple mover wouldn’t do anything with them, so they’d just accumulate as new deltastore rowgroups get created and populated.

SQL Server 2016 gave me the ability to reorganize indexes online, which was a great addition as it allowed us to keep those columnstore tables online while reorganizing the partitions and smashing together all of those open rowgroups and combine together the mostly-empty rowgroups. But that’s not a real solution to the problem; it just buys a bit of time and masks the symptoms.

Possible Solutions

Now we’re in the architecture portion of today’s post. There are three potential solutions I want to bring up, two of which I’ve implemented in production at one time or another.

Rowstore Front-End

The first architecture involves putting a rowstore table in front of the columnstore.

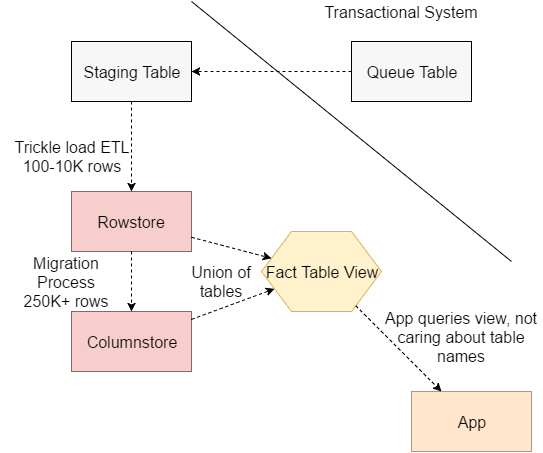

In this design, I have data coming from the transactional system, undergoing some ETL processing, and going into a staging table on the warehouse. From there, I perform the remainder of the ETL work and insert into a rowstore table. This rowstore table has the same attribute names and data types as the columnstore table, but instead of having a clustered columnstore index, it has a standard B-tree index and can have additional non-clustered indexes. From there, I expose the combination table using a view which simply unions the two sets of data so the application doesn’t have to see rowstore versus columnstore tables.

To move data from the rowstore to the columnstore, I have an external migration process. This migration process waits until one of two conditions is met: either there are at least 250,000 records in a single partition, or there is data from at least 4 partitions ago. In other words, for last three months (including the current), I’d hold off on migrating data until I hit the magic number of 250K records, so that I could migrate that as a single batch and bulk insert the results, bypassing the deltastore altogether. For older data, my expectation was that these are typically one-off or smaller data moves, and so waiting for 250K records was folly, as that might never come. Instead, move those immediately to keep the rowstore table compact. The migration process I wrote looked at data by partition, so I could pull in data from 6 months ago while still waiting for the current partition to accumulate enough records to make that move worthwhile.

Advantages

- I get immediate access to the data once it makes its way into the rowstore. This gets me access to the data earlier than the other alternatives.

- It solves one of the pain points I expressed above. Hot records don’t go into the deltastore, so we don’t see a proliferation of open rowgroups. Also, depending upon how quickly we reload data, it might solve the other problem as well: if data doesn’t get reloaded very frequently, letting it sit in a rowgroup for a day or so means that if we delete and reinsert data, we aren’t deleting from the clustered columnstore index.

Disadvantages

- This is a relatively complex design to implement, especially with a zero down-time release and a significant amount of existing code looking for tables.

- If a huge number of rows get into the rowstore table, query performance won’t be that great because we’re unioning rowstore values and columnstore values and performing calculations later (which negates some of the effect of having a columnstore index).

- If I have to reprocess months worth of data, that columnstore gets hit hard.

- It seems harder to follow. I had other database engineers regularly ask about these tables and some of our software engineers and testers found them vexing.

- You have to keep two tables in sync, so whenever I add a column to one side, I have to add the column to the other side and to the migration procedure and to the view.

I used this model for SQL Server 2014, but then removed it after we moved to SQL Server 2016 and went back to direct columnstore insertion. My hope was that I would not need to re-implement something for 2016, but that ended up not being the case.

Staging Table Direct Insertion

Once I started experiencing the same problems in SQL Server 2016, I had to act. Instead of once more putting a rowstore in front of my columnstore table, I decided to increase complexity in the ETL process to simplify application querying. To wit, I created a two-step load process. The first step of the load process, moving data from our transactional system into the warehouse, remains a trickle load, inserting records for a customer-date combination into a memory-optimized staging table. Once that load process is complete, the ETL process inserts a row into a warehouse queue table, letting the next step know that this customer-day combo is ready to go.

From there, I let the staging table grow a bit and run a job periodically to bulk move the staging table rows into the columnstore. Now I delete and insert larger chunks of rows, usually in the 250-800K range. This means that I avoid the deltastore completely and get a pretty decent row count in each compressed columnstore rowgroup.

Advantages

- Seamless post-ETL process. No new tables and no views.

- Calculations don’t need to hit the rowstore table, so I can take full advantage of columnstore aggregations.

Disadvantages

- Data loads are no longer “instantaneous.” I have to wait a bit longer for the second step of the process to commit before records show up in the warehouse.

- Additional ETL complexity means there are more things that could break.

- There is a possibility of weird data issues. For example, if I’m trying to load a customer-day combo while the first phase of the ETL process is trying to re-insert that data, I could get inconsistent results. I needed to add in checks preventing this from happening.

This is my current architecture. I’m happier with it than with the original rowstore table architecture.

Bulk Insertion

The third architecture is simple: don’t do trickle load at all. For many companies, it’s okay to do a major load of data once a day or once every several hours. If that’s the case, I’d recommend doing a bulk insertion over trying to implement a trickle load. Just like the other two methods, bulk insertion bypasses the deltastore when you’re loading enough records.

Ideally, this bulk insertion would be a straight insert, never updating or deleting data. If you can get away with it, the ideal pattern would be something like this:

If I need to load data for July 5th, I’m going to load all of the data for the partition which contains July 5th into a new table with that partition, and then I’m going to swap out the corresponding partition on the columnstore side. I would want to partition by the normal load unit—for example, if we load data monthly, I’d partition by month; if we load data daily, I’d partition by day if possible.

Advantages

- No deletes from the columnstore means no fragmentation.

- Columnstore rowgroups are as packed as possible. If there’s any doubt, we can run an index rebuild on the new partition before swapping it, as nothing reads that table.

- Just like the staging table direct insert, I don’t need to make any application changes or create new supporting objects outside of ETL.

Disadvantages

- Data loading must be periodic, and will probably be slower than trickle loads. You’re probably loading once a day or once a month at this point.

- If just one row changes, you have to rebuild the entire partition. That can be a time sink when partitions get very large.

This is the “ideal” solution, but making it work when customers expect data in less than 20 minutes is tricky. The staging table direct insert architecture seems to be a reasonable compromise between spray loading data and waiting a long time for data.

Use SQL Server 2016

Regardless of the architecture, SQL Server 2016 is a must-have for clustered columnstore indexes. The ability to reorganize indexes online is a life-saver. There is the possibility for these reorganizations to block queries for short amounts of time, but that’s a huge benefit if you do find yourself deleting a lot of data in the columnstore.

Conclusion

In this post, we looked at three architectures for loading data into columnstore indexes, with a special focus on trickle loading data. The common denominator for all of these is good staging tables to absorb the first wave of changes and move data into the columnstore in bulk.

3 thoughts on “Loading Into Columnstore: Avoid Trickle Loads”