Last week, I put together an analysis of SQL Saturdays. There were a lot of great comments, including one by Jack Corbett, who was interested in seeing a closer analysis of how far attendees tend to travel:

Most people from Maine aren’t going to anything outside of New England, and most aren’t going to go south of Boston. Seems to limit opportunities for attendees. Instead of analyzing the distance between locations, let’s look at how far non-speakers are traveling to go to events and limit based on that.

Fortunately(?), I happen to have just the data set. PASS has made certain Summit and SQL Saturday data available as part of the SQL Server Launch 2016 Discovery Days. Now, let me warn you: this is going to be a long blog post. But hey, it’s Friday, so cozy on up with your beverage of choice and let’s get data scienceing.

Dirty Data Done Dirt Cheap

Airing of Grievances

Before I begin, allow me to perform the data science Airing of Grievances. This is an important part of data analysis which most people gloss over, instead jumping right into the “clean up the dirty data” phase. But no, let’s revel in its filth for just a few moments.

- There are some strange data type choices here. For example, UserGUID (which is the deidentifier for individuals) is a uniqueidentifier on PASSMembersWithGeolocation, but is a different type in SQLSatRegistrations. There are some other data type choices which aren’t ideal (for example, the SQL Saturday number is a float?!), but that’s not a huge deal.

- What is a huge deal is that some of the postal codes are wrong. If you look at New Hampshire addresses, the postal codes have leading 0s lopped off. That came back to burn me in my first analysis, requiring me to re-run my geolocation function.

- There is no breakout by event; we only see a denormalized junction of SQL Saturday events and attendees. That can make calculations a bit harder, so I’d like to normalize this a bit.

- The second-biggest issue is that the data set is, in my opinion, incomplete. There are a few reasons why:

  - We only have data for people who registered, not for people who attended. There might be some pattern in people who registered but did not attend, but we won’t be able to see that. There are also going to be people who attended but did not register; these day-of attendees aren’t going to be in the system, but we let them in anyhow if we have room. I think those attendees would skew toward “local” rather than “distant” drive times, meaning that you’re not going to travel 500 miles to sneak into an event for which you didn’t register…usually.

  - There is no separation of speakers, sponsors, and “normal” attendees. Speakers and sponsors travel across the country to visit these things (we’re weird that way), but the big question is, how far do non-speaking, non-sponsoring attendees normally travel? I have no way of knowing for sure who is which, so I’ll try to answer that in the data cleansing section.

  - This analysis is only through about April of 2016. There have been several events since, which could affect our analysis, but I don’t have that data.

  - There are lots of missing fields. For example, there are 9 American SQL Saturdays which had no postal code set. There are also a lot of attendees with missing data: they didn’t fill in the city or the postal code or the region (state). There are even attendees with zero information filled out, and those are just going to be useless for our examination.

- This leads to my biggest issue: nasty data.

  - There are no controls on data entry. If you want to set city to “Nope, not gonna tell” then it’ll save that.

  - Furthermore, all of the entries are free-form. There are no checks to ensure that a postal code is valid, that a city exists, etc.

- In short, there are a lot of misunderstandings, miscodings, mistrust, and misanthropy in this data set.

Limitations of Analysis

Given the issues in this data set, I have to make a couple tough calls with the data. First, I’m only going to look at US attendees of US SQL Saturdays. I wanted to look at US and Canada SQL Saturdays like I did in my last analysis, but I had a lot of geocoding problems with Canadian data, so I had to throw that away. Also, I needed to throw away a lot of attendee data because I simply didn’t have enough to get a valid latitude and longitude. So we’ll have to go ahead knowing that our analysis is incomplete and any conclusions we draw will be uncertain—but that’s the nature of statistical analysis, so we’ll trudge on nonetheless.

Doing Data Science

Initial Preparation

Grab the PassDataIndividualTables data set. We’re going to create a database called PASSData and load the tables which are not named “_xlnm_FilterDatabase.” Once that was loaded, I did some basic data cleansing work. I made UserGUID a uniqueidentifier across the board and changed SatNum to an INT instead of a FLOAT. Then, I built a couple of tables: dbo.SQLSaturday and dbo.SQLSaturdayAttendee. First, dbo.SQLSaturday:

CREATE TABLE dbo.SQLSaturday

(

EventNumber INT NOT NULL,

EventDate DATE NULL,

EventName NVARCHAR(50) NOT NULL,

StreetAddress NVARCHAR(100) NOT NULL,

City NVARCHAR(100) NOT NULL,

Country NVARCHAR(100) NOT NULL,

PostalCode VARCHAR(14) NULL,

Latitude DECIMAL(8,4) NULL,

Longitude DECIMAL(8,4) NULL,

GeoPoint AS GEOGRAPHY::Point(Latitude, Longitude, 4326),

CONSTRAINT [PK_SQLSaturday] PRIMARY KEY CLUSTERED(EventNumber)

);

INSERT INTO dbo.SQLSaturday

(

EventNumber,

EventDate,

EventName,

StreetAddress,

City,

Country,

PostalCode

)

SELECT DISTINCT

s.SatNum,

ss.EventDate,

s.EventName,

CASE

WHEN s.StreetAddress IS NULL AND s.VenueName = 'N/A' THEN ''

WHEN s.StreetAddress IS NULL AND s.VenueName <> 'N/A' THEN s.VenueName

ELSE s.StreetAddress

END,

s.City,

s.Country,

s.PostalCode

FROM dbo.SQLSatRegistrations s

OUTER APPLY

(

SELECT TOP(1)

EventDate

FROM dbo.SQLSatSessions sess

WHERE

sess.SatNum = s.SatNum

) ss

WHERE

s.Country IN ('United States');

--Tacoma 2009 and Jacksonville 2008 (#20) did not have dates set. Set them manually.

UPDATE dbo.SQLSaturday SET EventDate = '2008-09-12' WHERE EventNumber = 20 AND EventDate IS NULL;

UPDATE dbo.SQLSaturday SET EventDate = '2009-04-01' WHERE EventNumber = 11 AND EventDate IS NULL;

I added Latitude and Longitude so that I could geocode data, and then a Geography data type so that I can calculate distances. Notice that there were two SQL Saturdays which did not have dates. I’m pretty sure that Tacoma (event #11) never took place, but I updated it and Jacksonville’s second 2008 event regardless, just to make sure everything was accurate.

Next up, I want to create a table for attendees.

CREATE TABLE dbo.SQLSaturdayAttendee

(

UserGUID UNIQUEIDENTIFIER NOT NULL,

City NVARCHAR(50) NULL,

Region NVARCHAR(50) NULL,

Country NVARCHAR(50) NULL,

PostalCode NVARCHAR(50) NULL,

NumberAttended INT NOT NULL,

Latitude DECIMAL(8,4) NULL,

Longitude DECIMAL(8,4) NULL,

GeoPoint AS GEOGRAPHY::Point(Latitude, Longitude, 4326),

CONSTRAINT [PK_SQLSaturdayAttendee] PRIMARY KEY CLUSTERED(UserGUID)

);

INSERT INTO dbo.SQLSaturdayAttendee

(

UserGUID,

City,

Region,

Country,

PostalCode,

NumberAttended

)

SELECT

p.UserGUID,

p.City,

p.Region,

p.Country,

p.PostalCode,

COUNT(1)

FROM dbo.SQLSatRegistrations r

INNER JOIN dbo.PASSMembersWithGeolocation p

ON r.UserGUID = p.UserGUID

WHERE

r.Country in ('United States')

AND

(

(p.city IS NOT NULL AND p.region IS NOT NULL)

OR (p.postalcode IS NOT NULL)

)

AND ISNULL(p.Country, 'United States') = 'United States'

GROUP BY

p.UserGUID,

p.City,

p.Region,

p.Country,

p.PostalCode;

--Fix cases when postal codes had leading 0s lopped off.

UPDATE dbo.SQLSaturdayAttendee

SET

PostalCode = RIGHT('00000' + PostalCode, 5)

WHERE

LEN(PostalCode) < 5;

I have the same Latitude, Longitude, and GeoPoint attributes, and we’ll make use of those later. I also put on a NumberAttended attribute, which counts the number of United States SQL Saturdays a person has registered for. I’m limiting the attendee set to those who registered for events in the United States (r.Country) and who have either City+Region or PostalCode set. Then, I’m going to fix postal codes, adding leading 0s to ZIP Codes missing them.
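If you would rather do that padding on the R side, here is a minimal sketch of the same idea. The helper name pad_zip and the sample values are made up for illustration, and it assumes five-digit US ZIP Codes stored as character strings.

```r
# Hypothetical helper: left-pad short US ZIP Codes with zeros, mirroring
# RIGHT('00000' + PostalCode, 5) ... WHERE LEN(PostalCode) < 5 in the T-SQL.
pad_zip <- function(zip) {
  zip <- as.character(zip)
  # Only touch non-missing values shorter than five characters.
  short <- !is.na(zip) & nchar(zip) < 5
  zip[short] <- paste0(strrep("0", 5 - nchar(zip[short])), zip[short])
  zip
}

# Made-up sample values, not rows from the PASS data set.
sample_zips <- c("3101", "27703", "501", NA)
padded <- pad_zip(sample_zips)
print(padded)
```

Like the T-SQL version, this pads any short string, so free-form junk that happens to be under five characters gets zeros too; it only repairs values that were valid ZIP Codes to begin with.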

Quick Analysis

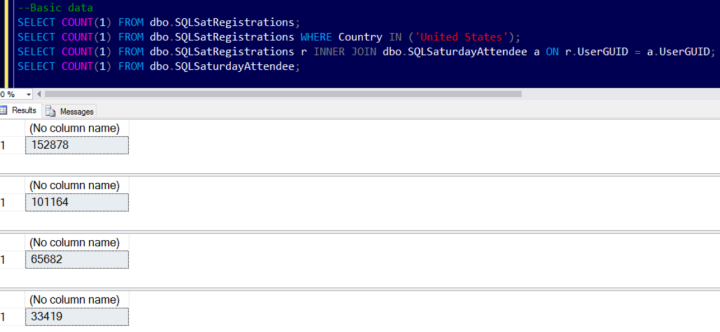

We’re far enough along that I can do some quick data set analysis and see what we’ve got.

--Basic data

SELECT COUNT(1) FROM dbo.SQLSatRegistrations;

SELECT COUNT(1) FROM dbo.SQLSatRegistrations WHERE Country IN ('United States');

SELECT COUNT(1) FROM dbo.SQLSatRegistrations r INNER JOIN dbo.SQLSaturdayAttendee a ON r.UserGUID = a.UserGUID;

SELECT COUNT(1) FROM dbo.SQLSaturdayAttendee;

There are 152,000 registrations in the data set, of which 101,164 were for United States events. Our cleaned-up attendee table contains 33,419 distinct attendees, who account for 65,682 of those 101,164 United States registrations.

Geocoding Time!

I’m going to do three separate rounds of geocoding. First, let’s geocode the SQL Saturday events. There are only about 300 of them, so I can use the same patterns that I used in my previous post. So let’s move over to some R code for a bit.

install.packages('RDSTK', repos = "http://cran.us.r-project.org")

install.packages("ggmap")

install.packages("RODBC")

install.packages("dplyr")

install.packages("httr")

install.packages("rjson")

install.packages("lubridate")

library(RDSTK)

library(ggmap)

library(RODBC)

library(dplyr)

library(lubridate)

conn <- odbcDriverConnect("Driver=SQL Server;Server=.;Initial Catalog=PASSData;Provider=SQLNCLI11.1;Integrated Security=SSPI")

sqlsats <- sqlQuery(conn, "SELECT EventNumber, StreetAddress, City, Country, ISNULL(PostalCode, '') AS PostalCode from PASSData.dbo.SQLSaturday")

sqlsats$GeoLookup <- paste(sqlsats$StreetAddress, sqlsats$City, sqlsats$Country, sqlsats$PostalCode, sep = " ")

# Perform Google Maps geocoding. Google Maps provides 1000 geocoding hits per day for free.

# Our data set is ~300 observations, so we can do that without issue.

sqlsats <- cbind(sqlsats, t(sapply(sqlsats$GeoLookup, geocode, USE.NAMES=FALSE)))

#A few records failed to get lat/long

#I will handle them as one-off updates in SQL.

sqlsats

sqlsats.good <- filter(sqlsats, !is.na(lon))

sqlsats.good

# Via http://stackoverflow.com/questions/14334840/how-to-insert-a-dataframe-into-a-sql-server-table

# Not a great practice, but for 300 rows, it'll do.

cmd <- paste("UPDATE PASSData.dbo.SQLSaturday SET Latitude = ",sqlsats.good$lat,", Longitude = ",sqlsats.good$lon," WHERE EventNumber = ", sqlsats.good$EventNumber, sep="", collapse=";")

result <- sqlQuery(conn, cmd, as.is=TRUE)

The first dozen lines or so are loading lots of packages. I’m not going to use all of them for this first section, but we’ll need them later. Then, we make a connection to SQL Server and grab data from the SQLSaturday table. I create a GeoLookup column to make lookups a bit easier.

The next line, where we do the geocoding, is a really cool thing you can do in R. Basically, I want to take my sqlsats data frame and append latitude and longitude to it. That’s what cbind (column bind) gives us. To understand the t(sapply(…, geocode, …)) bit, you have to work from the inside out. The geocode function is what we use to get latitude and longitude from Google Maps given an address. We want to apply that function to every observation in the sqlsats data frame (in T-SQL parlance, every row in the table), so we use the sapply variant of the apply function, and use the t() function to transpose the results so we can append them to our sqlsats data frame.
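To see the shapes involved without calling Google, here is a small sketch that swaps in a stand-in function. The name fake_geocode and its fabricated coordinates are assumptions for illustration only; the real geocode function returns a richer object, so the details of the result will differ.

```r
# Stand-in for a geocoder: returns a named vector per address.
# The "coordinates" are fabricated from the string length, purely for illustration.
fake_geocode <- function(addr) {
  c(lon = -nchar(addr), lat = nchar(addr))
}

events <- data.frame(
  EventNumber = c(1L, 2L, 3L),
  GeoLookup = c("Orlando", "Tampa FL", "Cleveland OH"),
  stringsAsFactors = FALSE
)

# sapply returns one COLUMN per address: a 2 x 3 matrix with rows lon and lat.
raw <- sapply(events$GeoLookup, fake_geocode, USE.NAMES = FALSE)
print(dim(raw))

# t() flips that to one ROW per address, which lines up with the data frame,
# so cbind can append lon and lat as new columns.
events <- cbind(events, t(raw))
print(events)
```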

From there, I filter out any SQL Saturdays missing longitude and update my SQLSaturday table with the longitudes and latitudes we grabbed.
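The update step leans on the fact that paste is vectorized. Here is a minimal sketch with two made-up events (the data frame name good and its values are hypothetical) showing what the generated command string looks like before it goes to sqlQuery.

```r
# Made-up coordinates for two hypothetical events.
good <- data.frame(
  EventNumber = c(10L, 11L),
  lat = c(1.5, 2),
  lon = c(-3, -4.25)
)

# paste() builds one UPDATE per row; collapse = ";" then joins them
# into a single string that can be sent as one batch.
cmd <- paste(
  "UPDATE dbo.SQLSaturday SET Latitude = ", good$lat,
  ", Longitude = ", good$lon,
  " WHERE EventNumber = ", good$EventNumber,
  sep = "", collapse = ";"
)
cat(cmd, "\n")
```

This only works comfortably because every pasted value is numeric. For anything involving free-form text, a staging table or a parameterized query is the safer route.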

As mentioned, there are some SQL Saturdays missing latitude and longitude, so I had to do lookups manually. There were also a couple of SQL Saturdays which were miscoded because they didn’t have states listed.

--Manual cleanup

UPDATE dbo.SQLSaturday SET Latitude = 30.2782, Longitude = -81.511850 WHERE StreetAddress = '1 UNF Drive';

UPDATE dbo.SQLSaturday SET Latitude = 41.6618, Longitude = -91.5365 WHERE StreetAddress = '101 Jessup Hall';

UPDATE dbo.SQLSaturday SET Latitude = 45.5026, Longitude = -73.5735 WHERE StreetAddress = '2000 McGill College, 5e étage';

--Columbia was marked as W. Columbia in New York, rather than Columbia South Carolina

UPDATE dbo.SQLSaturday SET Latitude = 33.9518, Longitude = -81.1157 WHERE City = 'West Columbia';

--Richmond was miscoded as well for 2010.

UPDATE dbo.SQLSaturday SET Latitude = 37.6488, Longitude = -78.7015 WHERE EventNumber = 30;

Next up, I want to geocode the attendee data. I’m going to have to do this a different way for a couple of reasons. First, we’re going to have way more than 1000 attendees, so I can’t use Google Maps. Instead, I’m going to use the OpenStreetMap data set (by way of the Data Science Toolkit) to perform free geocoding. It’s slower than the Google API, but at least I don’t have to pay…

I’m going to get two subsets of attendees: those who put in a valid ZIP Code (and I can throw out everything else because typically, ZIP Codes are more distinctive than cities), and those who put in valid-ish city + region combos.

--Postal codes

SELECT DISTINCT

a.Country,

a.PostalCode

FROM dbo.SQLSaturdayAttendee a

WHERE

a.PostalCode IS NOT NULL;

--Approximately valid cities

SELECT DISTINCT

a.City,

a.Region,

a.Country

FROM dbo.SQLSaturdayAttendee a

WHERE

a.PostalCode IS NULL

AND LEN(City) > 2

AND LEN(Region) > 1

AND City NOT LIKE '%[0-9]%' --Some people put in street addresses

AND Region NOT LIKE '%[0-9]%' --Some people put in strange values

--Some people are very regional

AND Region NOT IN ('Midwest', 'North', 'South', 'North America', 'mid-atlantic', 'East', 'IN', 'this one', 'Mountain West', 'NA', 'N/A')

AND City NOT LIKE '%@%' --Some people put in e-mail addresses

AND City <> 'N/A' --Some people don't like listing city

AND City <> 'NA'

ORDER BY

a.City,

a.Region;

There are 6544 distinct postal codes in the data set, and 2406 distinct city-region pairings, adding up to 8950 lookups we’ll need to do. These 8950 lookups will apply to upwards of 33,273 attendees (which you can get by removing the DISTINCT clauses). At this point, I want to mention that we’re down to about 30K attendees from our original 93K data set (that is, there were 93,838 unique UserGUIDs in dbo.SQLSatRegistrations). If I were trying to do predictive analysis, I’d start to get really concerned here because we’re throwing away 2/3 of the attendee set. But let’s soldier on, because 30K attendees should still at least give us some idea.

Geocoding this time, as I mentioned, will be a bit trickier. Let’s get to the R:

# From http://stackoverflow.com/questions/22887833/r-how-to-geocode-a-simple-address-using-data-science-toolbox

# My own modifications include the if-else block for lookup failures

geo.dsk <- function(addr){ # single address geocode with data sciences toolkit

require(httr)

require(rjson)

url <- "http://www.datasciencetoolkit.org/maps/api/geocode/json"

response <- GET(url,query=list(sensor="FALSE",address=addr))

json <- fromJSON(content(response,type="text"))

if(length(json['results'][[1]]) > 0) {

loc <- json['results'][[1]][[1]]$geometry$location

return(c(address=addr,long=loc$lng, lat= loc$lat))

} else {

return(c(address='',long=0,lat=0))

}

}

zips <- sqlQuery(conn, "SELECT DISTINCT a.Country, a.PostalCode FROM PASSData.dbo.SQLSaturdayAttendee a WHERE a.PostalCode IS NOT NULL;")

zips$CheckString = paste(zips$PostalCode, zips$Country, sep = " ")

zips$lat <- 0

zips$long <- 0

result <- do.call(rbind,lapply(as.character(zips$CheckString),geo.dsk))

zips <- data.frame(result)

zips

head(zips)

sqlSave(conn, zips, tablename="ZipStage")

cities <- sqlQuery(conn, "SELECT DISTINCT a.City, a.Region, ISNULL(a.Country, '') AS Country FROM PASSData.dbo.SQLSaturdayAttendee a WHERE a.PostalCode IS NULL AND LEN(City) > 2 AND LEN(Region) > 1 AND City NOT LIKE '%[0-9]%' AND Region NOT LIKE '%[0-9]%' AND Region NOT IN ('Midwest', 'North', 'South', 'North America', 'mid-atlantic', 'East', 'IN', 'this one', 'Mountain West', 'NA', 'N/A') AND City NOT LIKE '%@%' AND City <> 'N/A' AND City <> 'NA' ORDER BY a.City, a.Region;")

cities$CheckString = paste(cities$City, cities$Region, cities$Country, sep = " ")

cities$lat <- 0

cities$long <- 0

result <- do.call(rbind,lapply(as.character(cities$CheckString),geo.dsk))

cities <- data.frame(result)

cities

sqlSave(conn, cities, tablename="CitiesStage")

I copy-pasted the geo.dsk function from Stack Overflow and added in an if-else statement to check to see if json[‘results’] has values. Basically, we’re hitting the Data Science Toolkit’s geocoding API endpoint with the partial address (postal code or city + state) we have, and then we’re shredding the returned JSON to get latitude and longitude. If the lookup failed for whatever reason, I return a latitude and longitude of 0.
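Here is a small offline sketch of just that if-else step. The helper name extract_location is hypothetical, and the two hand-built lists only mimic the shape that geo.dsk appears to expect from the parsed JSON; they are not real responses from the service.

```r
# Hypothetical helper isolating the if-else branch of geo.dsk.
# `json` is assumed to be the parsed response: a list with a `results` element.
extract_location <- function(json, addr) {
  if (length(json[["results"]]) > 0) {
    loc <- json[["results"]][[1]]$geometry$location
    c(address = addr, long = loc$lng, lat = loc$lat)
  } else {
    # Lookup failed: fall back to an empty address and 0/0 coordinates.
    c(address = "", long = 0, lat = 0)
  }
}

# Hand-built stand-ins for a successful and a failed lookup (made-up values).
hit <- list(results = list(list(geometry = list(location = list(lat = 35.96, lng = -78.84)))))
miss <- list(results = list())

found <- extract_location(hit, "27703 United States")
failed <- extract_location(miss, "Nope, not gonna tell")
print(found)
print(failed)
```

Note that c() coerces everything to character because the address is a string, which is why the staged lat and long values come through as text rather than numbers.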

The first data set I pull out is ZIP Codes. I first create a data frame from my SQL query, add a CheckString variable to paste together the address components, and then call geo.dsk to perform lookups for each CheckString in zips. The results get converted into a data frame, which becomes my new zips, and I’ll use RODBC’s sqlSave function to save the data in a staging table that I’ll call ZipStage. We do the same thing for cities.

Now that I have latitudes and longitudes, I’m going to import them from my staging table into the SQLSaturdayAttendee table. Note that I didn’t paste together a massive update statement for the 9000-ish results; instead, I put them in a staging table, as that’s going to be a lot more efficient than doing 9000 update statements.

UPDATE a

SET

Latitude = z.lat,

Longitude = z.long

FROM dbo.SQLSaturdayAttendee a

INNER JOIN master.dbo.ZIPStage z

ON CONCAT(a.PostalCode, ' ', a.Country) = z.address

UPDATE a

SET

Latitude = c.lat,

Longitude = c.long

FROM dbo.SQLSaturdayAttendee a

INNER JOIN master.dbo.CitiesStage c

ON CONCAT(a.City, ' ', a.Region, ' ', a.Country) = c.address

AND a.PostalCode IS NULL

--Manual cleanup of Columbus, Ohio, which was geocoded to somewhere around Berea

UPDATE dbo.SQLSaturdayAttendee SET Latitude = 39.9612, Longitude = -82.9988 WHERE City = 'Columbus' AND Region IN ('Ohio', 'OH') AND PostalCode IS NULL;

One interesting thing I found was that the geocoding service put Columbus, Ohio up near Cleveland, which skewed Columbus results significantly. I’m not sure why it did that, but this leads me to believe that there are probably a few other geocoding errors that I didn’t catch. I don’t think they’re going to radically alter the results, however, so I didn’t spend any more time looking for them.

Who Speaks?

One consideration I had with this data set is that I didn’t want to include speakers and sponsors in the attendee set. Ideally, I’d keep them separated because their goals and motivations are different. For this data set, I’m really interested in seeing behavior patterns of non-speaker, non-sponsor attendees. But how do I figure out who’s an attendee and who’s a speaker when the data set is completely anonymized and there aren’t any flags for speakers or sponsors of events?

Twin Peaks

My expectation is that speaker/sponsor behavior will differ from “normal” attendee behavior. Here’s a quick data sample to show my conjecture in action. I’m going to query everybody in ZIP Code 27703 (which is where I live). See if you can figure out which one of these is me…

SELECT * FROM dbo.SQLSaturdayAttendee a WHERE a.PostalCode = '27703' ORDER BY a.NumberAttended DESC;

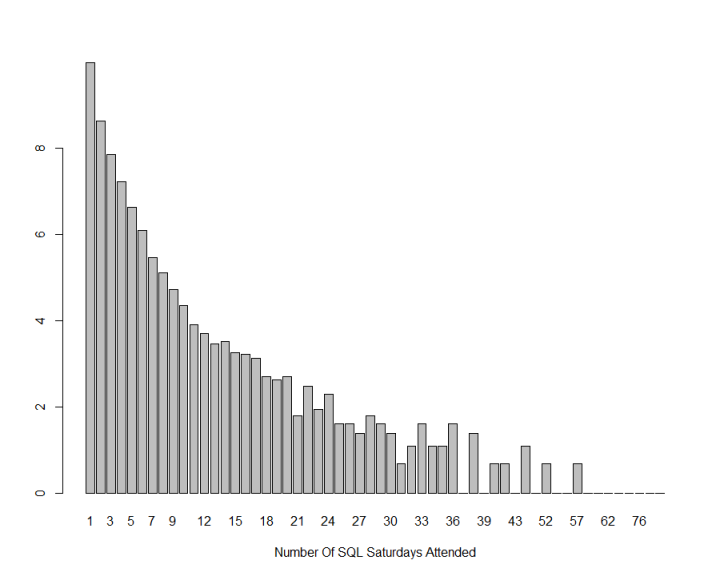

If I try to generalize this phenomenon, I’ll look for how many people attended (or at least registered for) a particular number of SQL Saturday events. Ideally, what I’d find is a lot of people with 1, 2, or 3 events; then a dip; then an increasing number of people at some point; and finally another dip.

I’m going to use a quick T-SQL query to get the raw data, and then analyze it in R because R is perfect for this type of quick analysis.

#Figure out the cutoff for likely speakers.

cmd <- "SELECT NumberAttended, COUNT(1) AS People FROM PASSData.dbo.SQLSaturdayAttendee GROUP BY NumberAttended ORDER BY NumberAttended;"

attendee.breakdown <- sqlQuery(conn, cmd)

#A vast majority of entrants have been to one SQL Saturday. Let's take the log of the distribution

#to see the pattern a bit better.

barplot(xlab="Number Of SQL Saturdays Attended", attendee.breakdown$People, names=attendee.breakdown$NumberAttended)

#What we see is an exponential decay pattern. This is unfortunate for us because we

#were hoping to find a two-peaked distribution.

barplot(xlab="Number Of SQL Saturdays Attended", log(attendee.breakdown$People), names=attendee.breakdown$NumberAttended)

We’re not looking for pretty; we’re looking for fast. If I wanted pretty, I’d pull down ggplot2 and spend a while sprucing up the graphics. Anyhow, here’s what you get when you run the first barplot:

That’s really hard to read because such a high percentage of attendees have been to just one SQL Saturday. So next up, I’m going to take the log of number of attendees. This will give us a better idea of the underlying distribution of data.

I believe the appropriate response at this point is, “Well, shoot.” We see a fairly smooth exponential decrease as number of SQL Saturdays attended goes up. There’s a little blip around the 13-14 mark, but you’d have to do some major squinting to make out a second distribution in the data. As a result, I don’t have a great bifurcation mark, and so I’m picking a quick and dirty number: 9. If you’ve been to at least 9 SQL Saturdays, you’ve probably gone from “casual attendee” to speaker (or at least active volunteer). It’s easy to think of counter-examples, since places like Jacksonville have had quite a few more SQL Saturdays than places like Omaha, but I think 9’s about as good a cutoff point as any.

Analyzing Attendees

So now, after about 3000 words, we get to the main event. Who’s really going to these things? As usual, I’m going to start off with a T-SQL statement which frames my data nicely, and then perform analysis using R.

SELECT

s.EventNumber,

s.EventName,

s.EventDate,

s.City,

a.UserGUID,

a.NumberAttended,

s.GeoPoint.STDistance(a.GeoPoint) / 1609.344 AS DistanceInMiles

FROM PASSData.dbo.SQLSatRegistrations r

INNER JOIN PASSData.dbo.SQLSaturday s

ON s.EventNumber = r.SatNum

INNER JOIN PASSData.dbo.SQLSaturdayAttendee a

ON r.UserGUID = a.UserGUID

WHERE

a.Longitude IS NOT NULL

AND (s.GeoPoint.STDistance(a.GeoPoint) / 1609.344) < 3000

AND a.NumberAttended < 9;

This is my data set, which returns 55019 rows. This means that we lost approximately half of our registrants as a result of data cleansing. Again, this should make us quite circumspect about predicting results, but I think we have enough data to do some descriptive analytics without making fools of ourselves.

One stipulation I want to make is that I’m cutting off any entries over 3000 miles. My belief is that nobody is flying cross-country to visit a SQL Saturday (again, excluding speakers and sponsors). The only exception I could see is Hawaii, so I’m probably skewing that result a bit…but even then, most of the long-distance travelers are probably speakers and sponsors going on vacation. If the distance I came up with is more than 3000 miles, I’m just treating it as bad data.
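As a rough cross-check on the STDistance / 1609.344 expression, here is a minimal haversine sketch in R. The function name haversine_miles is made up, and it assumes a spherical Earth with a mean radius of 6,371,008.8 meters, whereas the geography type works on an ellipsoid, so expect small differences from SQL Server's numbers.

```r
# Great-circle distance in miles via the haversine formula.
# Assumes a spherical Earth (mean radius 6,371,008.8 m); this is an
# approximation of, not a replacement for, GEOGRAPHY::STDistance.
haversine_miles <- function(lat1, lon1, lat2, lon2) {
  to_rad <- pi / 180
  dlat <- (lat2 - lat1) * to_rad
  dlon <- (lon2 - lon1) * to_rad
  a <- sin(dlat / 2)^2 +
    cos(lat1 * to_rad) * cos(lat2 * to_rad) * sin(dlon / 2)^2
  # pmin guards against rounding pushing sqrt(a) slightly above 1.
  meters <- 2 * 6371008.8 * asin(pmin(1, sqrt(a)))
  meters / 1609.344  # meters per mile, same constant as the T-SQL
}

# Columbus uses the coordinates from the manual fix earlier;
# the Cleveland coordinates are an assumed city-center value.
cleveland_columbus <- haversine_miles(41.4993, -81.6944, 39.9612, -82.9988)
print(round(cleveland_columbus))
```

Anything this function puts at several thousand miles for a US attendee and a US event is a good candidate for the "bad data" bucket described above.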

#Get non-speaker attendee distance from each event.

cmd <- "SELECT s.EventNumber, s.EventName, s.EventDate, s.City, a.UserGUID, a.NumberAttended, s.GeoPoint.STDistance(a.GeoPoint) / 1609.344 AS DistanceInMiles FROM PASSData.dbo.SQLSatRegistrations r INNER JOIN PASSData.dbo.SQLSaturday s ON s.EventNumber = r.SatNum INNER JOIN PASSData.dbo.SQLSaturdayAttendee a ON r.UserGUID = a.UserGUID WHERE a.Longitude IS NOT NULL AND (s.GeoPoint.STDistance(a.GeoPoint) / 1609.344) < 3000 AND a.NumberAttended < 9;"

attendee <- sqlQuery(conn, cmd)

From here until I get through the results, I’m going to throw in a bunch of images and single lines of code. I think the images describe what the code is doing, so I’ll mostly add color commentary instead of function call descriptions.

boxplot(attendee$DistanceInMiles, data=attendee, main="Attendee Distance Traveled", horizontal=TRUE)

This box and whisker plot shows a couple of things: the bottom 75% of attendees don’t travel that far, and there are a lot of outliers in this data set. As useful as this visualization is, I also want to get a summary of results.

summary(attendee$DistanceInMiles)

The median distance is 19 miles, and the mean distance is 94 miles. 75% of attendees travel less than 70 miles in this data set. So where do we get 400-600 miles? Maybe it’s the 90th or 95th percentile.

quantile(attendee$DistanceInMiles, c(0.25, 0.5, 0.75, 0.9, 0.95))

95% of attendees travel under 400 miles. But how has this changed over time? I’m going to use the year function in lubridate and apply it to my distance in miles plot to group by year.

boxplot(attendee$DistanceInMiles~year(attendee$EventDate), main="Attendee Distance Traveled By Year", horizontal=TRUE)

This graphic is packed, but we can see a couple of things. First, the median has dropped a bit since 2007-2008. Second, the 75th percentile has also dropped a bit. I think both of these make a lot of sense: as we get more events, people don’t have to travel as far to attend a SQL Saturday. If the only one in your region is 300 miles away, you can justify going that far once a year for a unique event. But if there are 6 in your region over the course of a year, why not just pick the closest one or two?

Still, I want to see some hard numbers, so I’m going to call the summary function for each year.

tapply(attendee$DistanceInMiles, year(attendee$EventDate), summary)

$`2007`

Min. 1st Qu. Median Mean 3rd Qu. Max.

2.896 13.410 93.150 229.600 182.700 2147.000

$`2008`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.2401 10.5600 27.3500 159.9000 118.8000 2515.0000

$`2009`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.2845 9.6410 19.2300 116.2000 73.5600 2473.0000

$`2010`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.1588 10.1300 20.5500 120.6000 95.0500 2717.0000

$`2011`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.6631 10.5800 23.2100 123.3000 106.2000 2686.0000

$`2012`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.1913 9.7210 21.3200 113.0000 92.9900 2843.0000

$`2013`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0337 9.3650 19.7400 103.6000 72.5000 2710.0000

$`2014`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0337 8.5420 17.3000 84.3200 60.1100 2694.0000

$`2015`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0337 8.1410 16.5700 74.9300 49.7500 2700.0000

$`2016`

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0337 9.3220 19.8800 81.8100 57.3300 2685.0000

The mean has dropped considerably, from roughly 110-120 miles down to 75-85 since 2014. 75% of attendees now won’t go more than about an hour for an event (or, phrased differently, events are finding plenty of local attendees). Let’s see what it looks like at the 90th and 95th percentiles.

tapply(attendee$DistanceInMiles, year(attendee$EventDate), quantile, type=8, probs=c(0.25, 0.5, 0.75, 0.9, 0.95))

$`2007`

25% 50% 75% 90% 95%

13.29615 93.14978 185.26700 865.47610 1025.75327

$`2008`

25% 50% 75% 90% 95%

10.56431 27.35487 118.84406 457.74800 823.98637

$`2009`

25% 50% 75% 90% 95%

9.616829 19.226355 74.048157 204.863135 466.467323

$`2010`

25% 50% 75% 90% 95%

10.12820 20.55441 95.39654 224.65303 546.36305

$`2011`

25% 50% 75% 90% 95%

10.57865 23.21103 106.20535 235.10075 571.08523

$`2012`

25% 50% 75% 90% 95%

9.717188 21.323516 92.995248 250.098624 450.006102

$`2013`

25% 50% 75% 90% 95%

9.365244 19.742029 72.515673 215.197417 439.364723

$`2014`

25% 50% 75% 90% 95%

8.541756 17.301598 60.168109 177.018031 316.380895

$`2015`

25% 50% 75% 90% 95%

8.141225 16.571739 49.746705 155.265559 259.042488

$`2016`

25% 50% 75% 90% 95%

9.320905 19.878521 57.334977 155.104415 279.424947

Okay, I mentioned I’d focus mostly on color commentary. Here’s an exception: I specified type=8 for my quantile function. Which type you choose can make a huge difference in the calculation. The link recommends type 8 when you don’t have a reason to go with a different calculation, but you can use any of the continuous calculation types. They will differ a bit (and you can see differences between what summary provided us and what quantile gives us), but for this data set, I don’t think you get any crazy differences by selecting type 7 or 9.
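To get a feel for how much the type argument can matter, here is a small sketch on a made-up, right-skewed sample (not the PASS data). It compares types 7, 8, and 9 at the 90th percentile; summary uses the default, type 7.

```r
# Small made-up, right-skewed sample for illustration only.
x <- c(1, 2, 3, 4, 10)

# Compare three of the continuous quantile types at the 90th percentile.
q7 <- quantile(x, probs = 0.9, type = 7, names = FALSE)
q8 <- quantile(x, probs = 0.9, type = 8, names = FALSE)
q9 <- quantile(x, probs = 0.9, type = 9, names = FALSE)
print(c(type7 = q7, type8 = q8, type9 = q9))
```

With only five points, the tail estimates can differ noticeably between types. With tens of thousands of rows, as in the attendee data, the interpolation gaps are tiny, which is why the choice matters much less here.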

Finally, this leads me to an interesting question: are we getting more registrants per event now than before? If we’re seeing event growth, attendee growth, and distance shrinkage, that indicates that SQL Saturdays are becoming more popular, drawing in people who previously wouldn’t travel the 20 miles across town to spend a day learning. Back to SQL Server once more.

SELECT

YEAR(s.EventDate) AS EventYear,

COUNT(DISTINCT s.EventNumber) AS NumberOfEvents,

COUNT(1) AS NumberOfRegistrants,

1.0 * COUNT(1) / COUNT(DISTINCT s.EventNumber) AS AverageAttendance

FROM dbo.SQLSaturday s

INNER JOIN dbo.SQLSatRegistrations r

ON s.EventNumber = r.SatNum

GROUP BY

YEAR(s.EventDate)

ORDER BY

EventYear DESC;

This time I included anybody who is in the list, regardless of whether we could geocode that person’s location. Given that 2016 was only about a third of the way over, I’m not sure we can put much credence into those registrant counts. But we have seen an increase over the years. It’s not a major increase, but it is an increase.

Conclusions

I’ve hedged my bets a few times during this post because I know the data’s incomplete, and there’s a risk that the points of the data set I’m missing would conclusively prove me wrong. Nevertheless, I think the likelihood of those extra data points being radically different than the ~50% that we were able to keep is fairly low, so I’ll make a couple of cautious conclusions.

First, non-speakers and non-sponsors do not appear to travel very far to SQL Saturdays. Looking at the yearly quantile breakout above, by the time we get to 2014, people have become a bit insular in their SQL Saturday attendance. The median traveler (after that initial event in Orlando in 2007) has never traveled more than about 27 miles, and that figure has hovered around 20 miles since 2009.

Also, I think the 600 mile radius is too large, based on the data set. Looking at the 95th percentile of travelers, those people haven’t gone more than about 440 miles since 2013, and the number has dropped down to the 260-280 range in 2015 and 2016. Also, the 95th percentile, even in a heavily skewed distribution like this one, is looking far out there. Even if you scale it back to just the 90th percentile (which is still considered an edge case area), attendees since 2014 won’t even go 200 miles. That means that there could be some overlap between Cleveland and Columbus (which, having visited both the past couple of years, I do see), but there’d be almost no overlap between Cleveland and Cincinnati, and you’d be stretching to see much overlap between Columbus and Louisville.

But my real conclusion is this: grab the data set and draw your own conclusions. I’m working through this stuff the same as anybody else could, and we’re in a community full of people who know how to analyze data. Maybe you’ll find something I missed which either bolsters or undercuts one of my points; either way, I want to know and I want to push you to dig just a little deeper than I did. (But hopefully what you find won’t completely invalidate my points…)

I don’t think the 600-mile goal is about reducing competition for attendees.

I think it’s about reducing competition for SPONSORS. (This is kinda mentioned in their blog post.) They want to make sure that sponsors don’t have to deal with multiple events happening in near proximity on the same weekends because they can’t afford to sponsor all of them.

As a speaker & sponsor, I kinda understand it. If we had SQLSaturdays in Chicago and Indianapolis on the same weekend, for example, I definitely can’t go to both of them. If they’re on back-to-back weekends, I could theoretically go, but it’s much less likely because I’m missing out on family time two weekends in a row, and that’s a quick way to end up in the doghouse. (If I made my team travel, I wouldn’t want to ask them to do that, either.)

Can attendees go? Sure. But speakers & sponsors, that’s where things get tough. (I’m not arguing for the 600-mile rule, either, I think that one’s kinda crazy.)

As usual, Brent, you’re astute. The sponsor travel is the driving factor for this decision (and that’s not a swipe at either PASS or sponsors); we’ve done a great job at increasing the number of attendees and speakers at events, but we haven’t seen that same sort of exponential growth for sponsors in the community. There are a lot of vendors that I talk to about SQLSaturday and they’ve never heard of it.

The problem is that the new limits don’t address the problem of sponsor growth; it’s just a stick, with no carrot.

Excellent write-up, however.

Stuart – aww, thanks sir!

That’s a good point, although I can see it swinging the other way on occasion as well: think of Grant Fritchey’s Ohio tour or Kevin Kline’s southeastern tour. If there are a couple of SQL Saturdays which can serve as a nice bookend, it might be easier to get an evangelist to visit a particular region. But in the general case, I think it’d work about the way you’ve put it for sponsors.

There is an additional important consideration here, which is:

“Would the 600 mile radius and 3 weekend blackout create a situation where SQL Saturdays that satisfied the attendee travel limits could not be held?”

After all, nobody is saying that you can’t hold events within 600 miles of each other – just that there has to be at least a weekend of buffer between such events. You could definitely use graph theory to make some formal statements about the conditions under which such conflicts would be forced, but it may be simpler to run a Monte Carlo simulation that includes historical SQL Saturday dates/locations along with various random potential new events.

My wild guess is that a small number of events will be effectively locked out, a larger number of events will have to pick a weekend that is not their first/second choice, and the majority of events will be largely unimpacted. But that’s just a guess. If I’m right, we may just be able to create some kind of smart exception for the locked out events.
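A minimal Python sketch of what such a simulation might look like follows. Everything in it is an assumption for illustration: the three existing events are made up rather than taken from the historical schedule, new events are drawn uniformly from a lat/lon box, the blackout is read as "same weekend plus one weekend either side," and year wrap-around is ignored.

```python
import math
import random

EARTH_RADIUS_MILES = 3958.8

# Hypothetical existing events as (lat, lon, week_of_year); not real schedule data.
EXISTING = [
    (41.50, -81.69, 5),
    (39.96, -83.00, 24),
    (38.25, -85.76, 31),
]


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat, dlon = p2 - p1, math.radians(lon2 - lon1)
    h = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def conflicts(candidate, existing, radius_miles=600, blackout_weeks=1):
    """True if the candidate is within the radius AND the blackout window of any event."""
    lat, lon, week = candidate
    return any(
        abs(week - w) <= blackout_weeks
        and haversine_miles(lat, lon, la, lo) <= radius_miles
        for la, lo, w in existing
    )


def simulate(existing, trials=1000, weeks_in_year=52, seed=0, **rule):
    """Estimate how often a random new event gets its first choice, another weekend, or nothing."""
    rng = random.Random(seed)
    counts = {"first_choice": 0, "other_weekend": 0, "locked_out": 0}
    for _ in range(trials):
        # Assumed sampling box, roughly the eastern/central United States.
        lat = rng.uniform(30.0, 45.0)
        lon = rng.uniform(-100.0, -75.0)
        first = rng.randrange(weeks_in_year)
        if not conflicts((lat, lon, first), existing, **rule):
            counts["first_choice"] += 1
        elif any(
            not conflicts((lat, lon, w), existing, **rule)
            for w in range(weeks_in_year)
        ):
            counts["other_weekend"] += 1
        else:
            counts["locked_out"] += 1
    return {k: v / trials for k, v in counts.items()}


if __name__ == "__main__":
    print(simulate(EXISTING, trials=1000, radius_miles=600, blackout_weeks=1))
```

Swapping `EXISTING` for the real historical dates and locations, and sampling new events from plausible host cities instead of a uniform box, would be the obvious next steps before reading anything into the three fractions.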

Kevin, are you willing to sign up for more analysis? 🙂

Scott – uh, I think the point is that the community shouldn’t have to do the analysis. If PASS is going to make that rule, then they should be doing the analysis before they make that decision, right?

The analysis comes BEFORE the decision, not the other way around.

I agree that the analysis *should* have been done before making a decision, but that ship has sailed.

We’re left with making a determination about whether or not the new rule needs to be repealed. I don’t think Kevin’s analysis, as it stands, is definitive on that question. It’s super valuable, but it only covers the demand side of the issue. We need to couple it with a supply side analysis. I’d donate my own time to do the supply side analysis, but this caught me in one of the busier months in recent memory.

WRT who should be doing the analysis, I think it’s fine to lean heavily on the community for analysis – but it should be done, as you note, in context of a proposal rather than a decision.

Scott – thankfully, Argenis Fernandez already agreed on Twitter and said they’re revising this rule. PASS is now working on changing it to something else, and they’re going to announce the revisions soon.

One would like to assume they’re going to bring data analysis and a proposal this time, rather than a mandate. Fingers crossed.

Ah, thank you. I missed that update.

It is what isn’t being done or said that is a concern here.

I’ll sign up for a wiki page to have a public shared analysis made. The current wiki, http://wiki.sqlsaturday.com/, is in disrepair.

Thanks, Kevin. This is amazing.

Also, this should be a presentation at events.

Thank you for doing this. Are you using it as an end in itself, or are you hoping to see some change from it? If it is the latter, what is the best way to follow up with you? –Robert Sterbal 412-977-3526

Hey, Robert, thanks for the question. I have to admit that with the modification to the guidelines (http://www.sqlpass.org/Community/PASSBlog/tabid/1476/entryid/809/Addressing-Concerns-About-the-SQLSaturday-Driving-Radius.aspx), I don’t have a particularly strong opinion on what ought to happen.

If you are interested in the topic and want to drive change, a good place to look might be the SQL Server community Slack channel (https://dbatools.io/slack/) if you aren’t already on it. There have been some good discussions on the topic and several of the most active people in this discussion hang out there as well.